Every email marketer has wondered: “Should I try a different subject line? Would a personal sender name work better? What if I send this at 2 PM instead of 10 AM?”

These questions used to mean guessing, crossing your fingers, and hoping for the best.

Email experiments (also known as A/B testing) turn those guesses into data-driven decisions. Instead of wondering what might work, you’ll know what works because your subscribers show you with their opens and clicks.

Running experiments delivers three major benefits:

- Higher open rates when you test subject lines and sender names

- More clicks when you test content, calls-to-action, and offers

- Better overall performance by continuously improving based on real subscriber behavior

The best part? The risk is low. You’re not betting your entire campaign on a hunch. You’re testing small tweaks with part of your list, then rolling out the winner to everyone else.

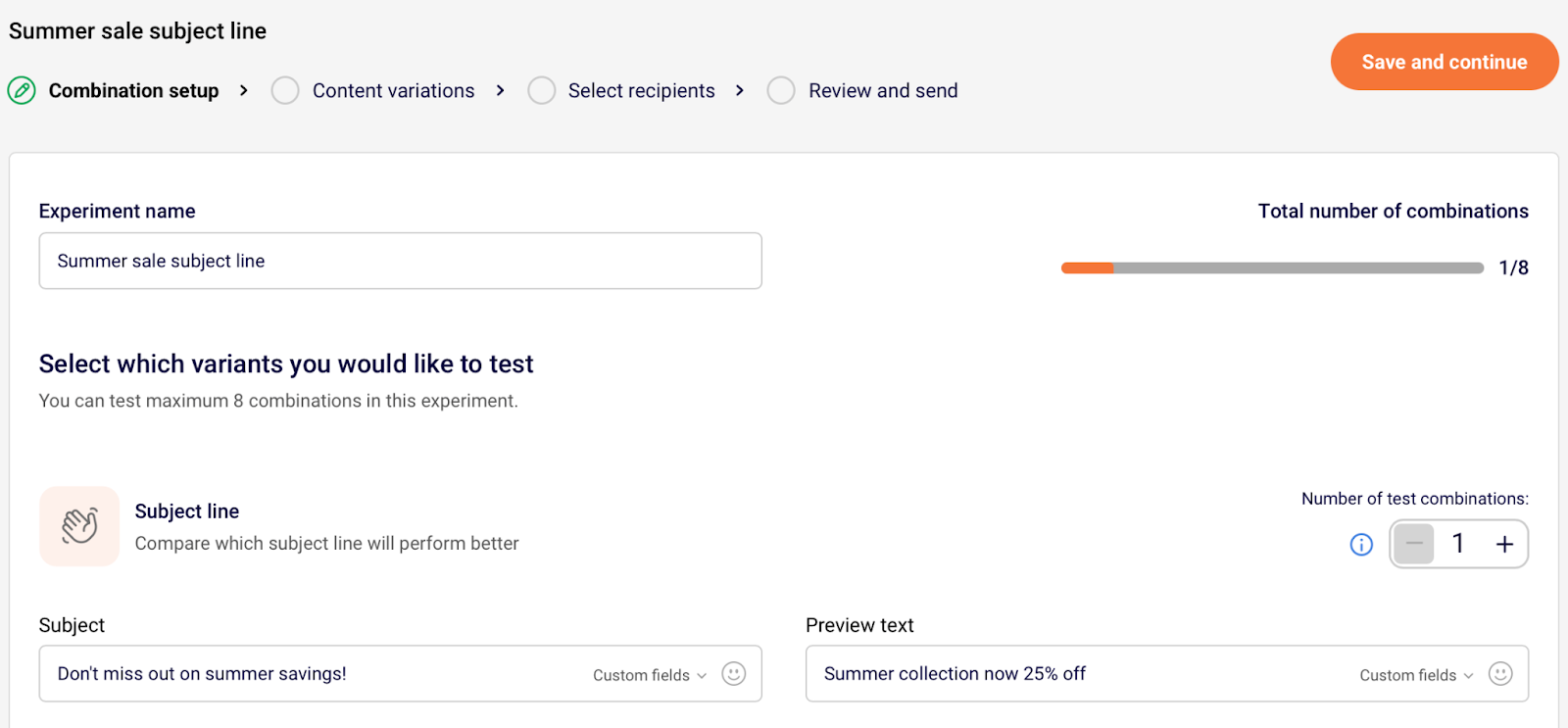

Easily test as many as 8 variants using Experiments.

Best Practices for Email Experiments

Running successful email experiments isn’t rocket science, but some rules will save you from headaches later.

General Rules

Before diving into specific test types, follow these fundamental principles:

Test one variable at a time.

Change your subject line AND your call-to-action, and you’ll have no clue which one actually moved the needle. You need to isolate variables to understand what’s working.

Note: Multivariate testing is recommended only for contact lists over 40,000 subscribers.
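One way to enforce the one-variable rule before you hit send is to compare your variants field by field. The sketch below is illustrative only: the `Variant` class and its three fields are hypothetical and do not reflect how Sender stores campaigns.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class Variant:
    # Hypothetical fields for illustration; not Sender's data model.
    subject: str
    sender_name: str
    cta_text: str


def changed_fields(a: Variant, b: Variant) -> list[str]:
    """Return the names of the fields that differ between two variants."""
    da, db = asdict(a), asdict(b)
    return [name for name in da if da[name] != db[name]]


def is_single_variable_test(a: Variant, b: Variant) -> bool:
    """True when exactly one field differs, so a lift can be attributed to it."""
    return len(changed_fields(a, b)) == 1


control = Variant("Summer collection now 25% off", "Sender Team", "Shop Now")
clean = Variant("Don't miss out on summer savings!", "Sender Team", "Shop Now")
muddy = Variant("Don't miss out on summer savings!", "Sender Team", "Get My Discount")

print(changed_fields(control, clean))  # ['subject']
print(changed_fields(control, muddy))  # ['subject', 'cta_text']
```

The second comparison changes both the subject line and the call-to-action, which is exactly the situation where you can’t tell which change moved the needle.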

Set a clear goal.

Sounds obvious, but you’d be surprised how many people just… start testing without thinking about whether they want opens, clicks, or conversions.

Ensure statistical significance.

Your test group needs enough subscribers to produce reliable results. Small lists should test with at least 50% of subscribers, while larger lists can test with smaller percentages.
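To see why group size matters, here is a minimal sketch of a two-proportion z-test, a standard way to check whether a difference in open rates is likely to be real. This is an assumption for illustration: the article does not say how Sender picks a winner, and the function name and sample numbers are made up.

```python
import math


def two_proportion_z_test(opens_a: int, sent_a: int,
                          opens_b: int, sent_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two open rates."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_err == 0:
        raise ValueError("open rates are all 0% or all 100%; nothing to compare")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same open rates (20% vs. 25%), very different group sizes.
_, p_small = two_proportion_z_test(20, 100, 25, 100)
_, p_large = two_proportion_z_test(400, 2_000, 500, 2_000)

print(f"100 per variant:   p = {p_small:.3f}")   # above 0.05, not conclusive
print(f"2,000 per variant: p = {p_large:.5f}")   # below 0.05, likely a real lift
```

The same five-point lift is inconclusive with 100 recipients per variant and convincing with 2,000, which is why small lists need to put a larger share of subscribers into the test.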

Run tests for the right duration:

- Small list (up to 5,000 subscribers) → Run for up to 24 hours

- Medium list (5,000–20,000 subscribers) → Run for around 12 hours

- Big list (20,000+ subscribers) → Run for 4–8 hours

Note: Don’t test during periods when your audience is unusually inactive. For many lists, that means weekends and holidays: people check email differently at those times, so the results won’t reflect how a normal send performs.

Limit your variations based on list size

- Small list → Stick to 2 variations

- Medium list → Try 3 variations

- Big list → Test more complex setups
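The duration and variation guidelines above can be sketched as a small lookup. The function and class names are hypothetical, and two details are assumptions: lists of exactly 5,000 or 20,000 subscribers are placed in the lower tier, and the ceiling of 8 variations for big lists comes from the product limit mentioned earlier, not from a recommendation in this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExperimentPlan:
    tier: str
    max_hours: int       # upper end of the suggested test duration
    max_variations: int  # suggested ceiling on the number of variants


def plan_for_list_size(subscribers: int) -> ExperimentPlan:
    """Map a list size to the duration and variation guidance above."""
    if subscribers < 0:
        raise ValueError("subscribers must be non-negative")
    if subscribers <= 5_000:
        return ExperimentPlan("small", max_hours=24, max_variations=2)
    if subscribers <= 20_000:
        return ExperimentPlan("medium", max_hours=12, max_variations=3)
    # Assumption: 8 is the product cap on variants, not a recommended number.
    return ExperimentPlan("big", max_hours=8, max_variations=8)


for size in (3_000, 12_000, 60_000):
    print(size, plan_for_list_size(size))
```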

Document your results

Keep a record of your tests and results to build knowledge over time and use it for your future campaigns.

What You Can Test (and What to Aim For)

Sender’s Experiments feature lets you test multiple elements across your campaigns. The key is aligning your test with a clear goal:

- Want higher open rates? Test subject lines or sender names.

- Need more clicks? Test your call-to-action, layout, offer, or content blocks.

- Concerned about deliverability? Test your “from” address or compare plain text vs. HTML emails.

Subject Line & Sender Name Experiments

These experiments focus on the elements subscribers see before opening your email, making them crucial for improving your open rates.

Subject Line Testing

Your subject line is doing one job: getting that email opened. Everything else is secondary. Here’s what actually makes a difference, in order of “test this first”:

Emotional vs. informational tone

- Emotional: “Don’t miss out on summer savings!”

- Informational: “Summer collection now 25% off”

Length variations

- Short: “Flash sale ends tonight”

- Long: “Last chance to save 40% on everything in our summer collection”

Personalization vs. generic

- Personalized: “Sarah, your cart is waiting”

- Generic: “Complete your purchase today”

Plain text vs. emoji

- Just text: “Check out this summer’s hot takes”

- With emoji: “Check out this summer’s hot takes 🥵”

Question vs. statement

- Question: “Ready for your best spring yet?”

- Statement: “Your spring transformation starts now”

Pro tip: Questions often work well because readers instinctively want to answer them, and opening the email is the easiest way to do that.

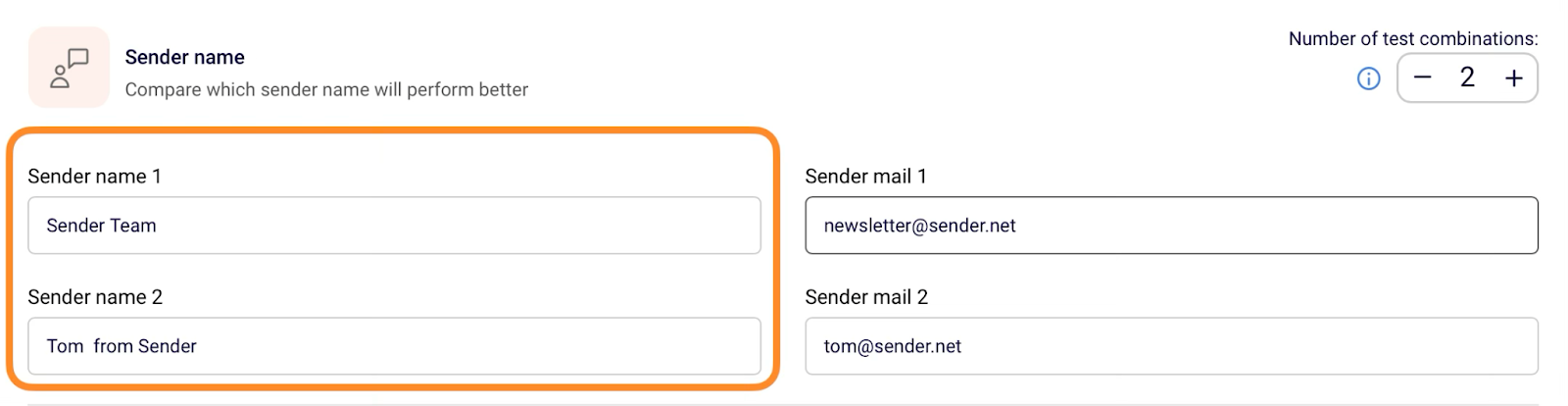

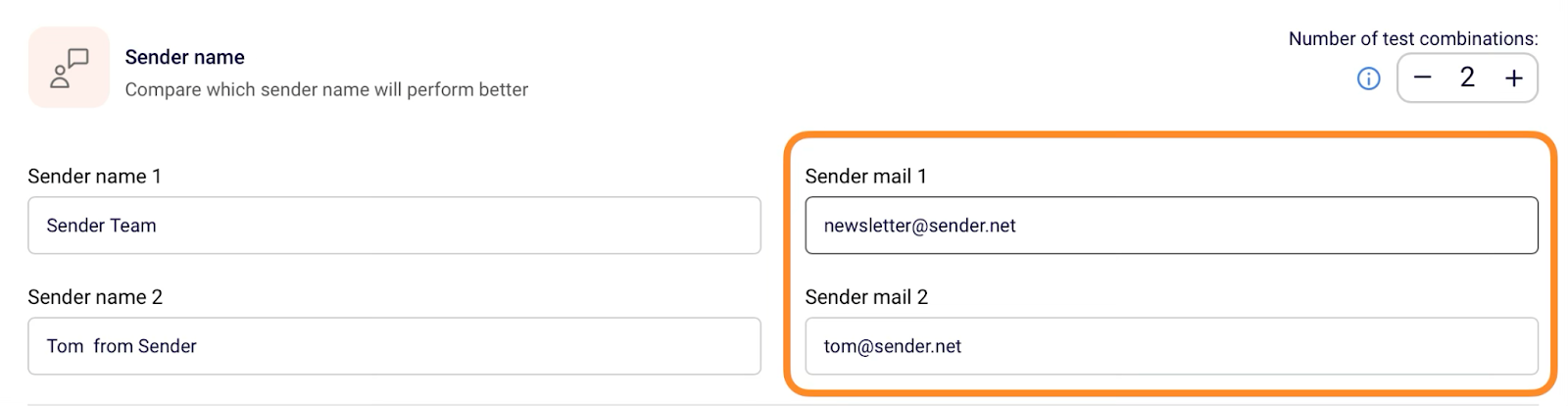

Sender Name Testing

Your sender name shows up right next to that subject line, making it equally important for building trust and recognition.

So, try testing these variations:

Brand name vs. personal name

- Brand: “Sender Team”

- Personal: “Tom from Sender”

Department vs. individual

- Department: “Customer Success Team”

- Individual: “Lisa from Customer Success”

Note: Personal names often perform better because they feel more human and trustworthy.

“Reply-To” Address Testing

Testing your reply-to address can significantly impact both subscriber engagement and email deliverability across different providers.

Try testing these variations:

Generic vs. personal email

- Generic: info@yourcompany.com

- Personal: tom@yourcompany.com

No-reply vs. real address

- No-reply: noreply@yourcompany.com

- Real: support@yourcompany.com

Note: Real reply-to addresses usually win because they signal that you want a conversation, not just a one-way broadcast.

Important: Outlook’s recent sender requirements emphasize using real email addresses that can receive replies in the “From” and “Reply-To” fields. Avoid addresses like “noreply@email.com”, since this choice can affect deliverability and inbox placement.

Sending Time Experiments

Here’s where things get interesting. Timing can completely make or break your campaign, and it varies wildly by audience.

Day of the Week

Different audiences check email on different days, making it essential to test when your specific subscribers are most active.

B2B audiences

We recommend testing Tuesday through Thursday during business hours, since your subscribers typically check email at work and make business decisions during the week.

B2C audiences

Consider testing evenings and weekends when people have more personal time to browse and shop.

Time of Day

The hour matters just as much as the day, and this is where industry knowledge comes in handy. Take the following into consideration when testing sending times:

Morning vs. evening

- Morning: 8-10 AM (the “check email with coffee” crowd)

- Evening: 6-8 PM (post-work, personal time)

Lunch break timing

- Test 12-1 PM when people are scrolling during lunch

Industry-specific timing

- Restaurants: Try 3-5 PM when people are thinking about dinner

- Fitness: Early morning or right after work when motivation strikes

Content Variation Experiments

These experiments test what happens after someone opens your email, focusing on driving clicks and conversions through design and messaging changes.

Be patient when testing content variations: change only one thing at a time. Otherwise, you won’t be able to tell which change actually made the difference.

Design & Visuals

No matter your business type, visuals create that crucial first impression once your email loads. Get it wrong, and people bounce faster than you can say “unsubscribe.”

Hero image variations

- Product-focused image vs. lifestyle image

- Single product vs. multiple products

- Image vs. no image

Email format

- HTML-designed email vs. plain text (especially effective for B2B, events, and local businesses)

- Simple layout vs. complex design

Visual elements

- Countdown timer vs. no timer

- Signature with photo and name vs. no signature

Offer & Call-to-Action

Your offer and call-to-action directly impact conversions, making these some of the most valuable elements to test for improving campaign ROI.

Offer type

- Free shipping vs. percentage discount (e.g., “Free delivery” vs. “20% off”)

- Dollar amount vs. percentage (“Save $50” vs. “Save 20%”)

CTA variations

- Button text: “Shop Now” vs. “Get My Discount” vs. “Start Saving”

- Button color: Brand color vs. contrasting color

- Button shape: Rounded vs. square corners

- Number of CTAs: Single-focused CTA vs. multiple options

Emotional Appeal

Different feelings motivate different people, and testing emotional triggers helps you figure out what gets your specific audience moving.

Test different psychological triggers:

- Urgency: “Sale ends tonight” vs. no time pressure

- Exclusivity: “Members only” vs. open offer

- Social proof: “Join 50,000+ customers” vs. no social proof

- Fear of missing out: “While supplies last” vs. no scarcity

Social Proof Elements

Sometimes people need to see that others trust you before they’ll take the leap themselves.

Consider trying these approaches:

With vs. without social proof blocks:

- Client logos and “Trusted by X companies”

- Customer testimonials

- Review ratings and counts

- User-generated content

Layout Testing

How you organize everything affects whether people can find what they’re looking for, especially on phones, where a large share of email is read.

Text formatting

- Font size (especially important for mobile readers)

- Paragraph length: Short paragraphs vs. longer blocks

- Bold vs. regular text for key points

Content organization

- Product benefits listed first vs. features first

- Single column vs. two-column layout

- Navigation menu vs. no menu

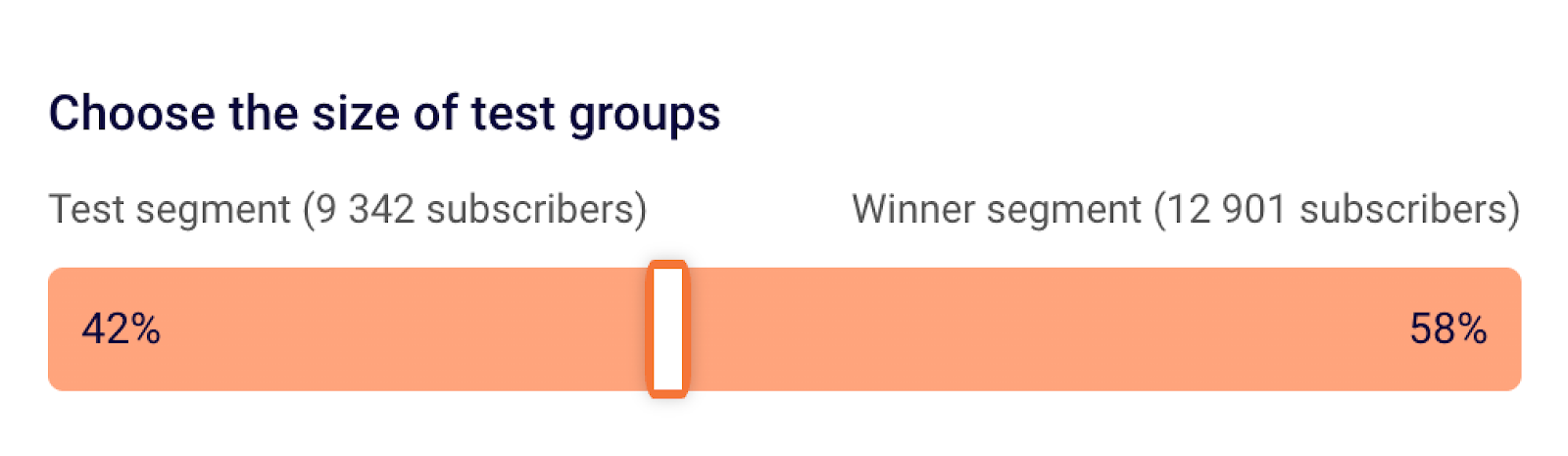

Pro Tip: Use Test Group & Winning Group Wisely

Getting your test group size right is crucial for getting reliable data while still reaching as many people as possible with your winning variation.

Sender’s Experiments feature lets you control what percentage of your list receives the test vs. the winning variation. Here’s how to optimize your split:

| Audience size | Recommended test group | Why this works |
| --- | --- | --- |
| < 10,000 | At least 50% | Smaller lists need larger test groups for reliable data |
| 10,000–100,000 | 20–30% | Medium lists can get good results with moderate test sizes |
| 100,000+ | 10–20% | Large lists generate reliable data quickly with smaller test groups |
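To make the table concrete, the sketch below turns an audience size into headcounts. It is an illustration under stated assumptions: it uses the lower bound of each recommended range, treats a list of exactly 100,000 as a large list, and splits the test group evenly across variants. The function names are hypothetical and are not part of Sender.

```python
from typing import Optional


def recommended_test_share(audience_size: int) -> float:
    """Lower bound of the recommended test-group share from the table above."""
    if audience_size < 10_000:
        return 0.50
    if audience_size < 100_000:
        return 0.20
    return 0.10


def split_audience(audience_size: int, variants: int,
                   test_share: Optional[float] = None) -> dict[str, int]:
    """Split a list into equal per-variant test groups plus a winning group."""
    if variants < 2:
        raise ValueError("an experiment needs at least 2 variants")
    share = recommended_test_share(audience_size) if test_share is None else test_share
    per_variant = round(audience_size * share) // variants
    test_group = per_variant * variants  # drop the remainder so groups stay equal
    return {
        "per_variant": per_variant,
        "test_group": test_group,
        "winning_group": audience_size - test_group,
    }


print(split_audience(8_000, variants=2))
# {'per_variant': 2000, 'test_group': 4000, 'winning_group': 4000}
print(split_audience(50_000, variants=3))
# {'per_variant': 3333, 'test_group': 9999, 'winning_group': 40001}
```

Pass `test_share` explicitly (for example `0.30`) to model the upper end of a range instead of the default lower bound.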

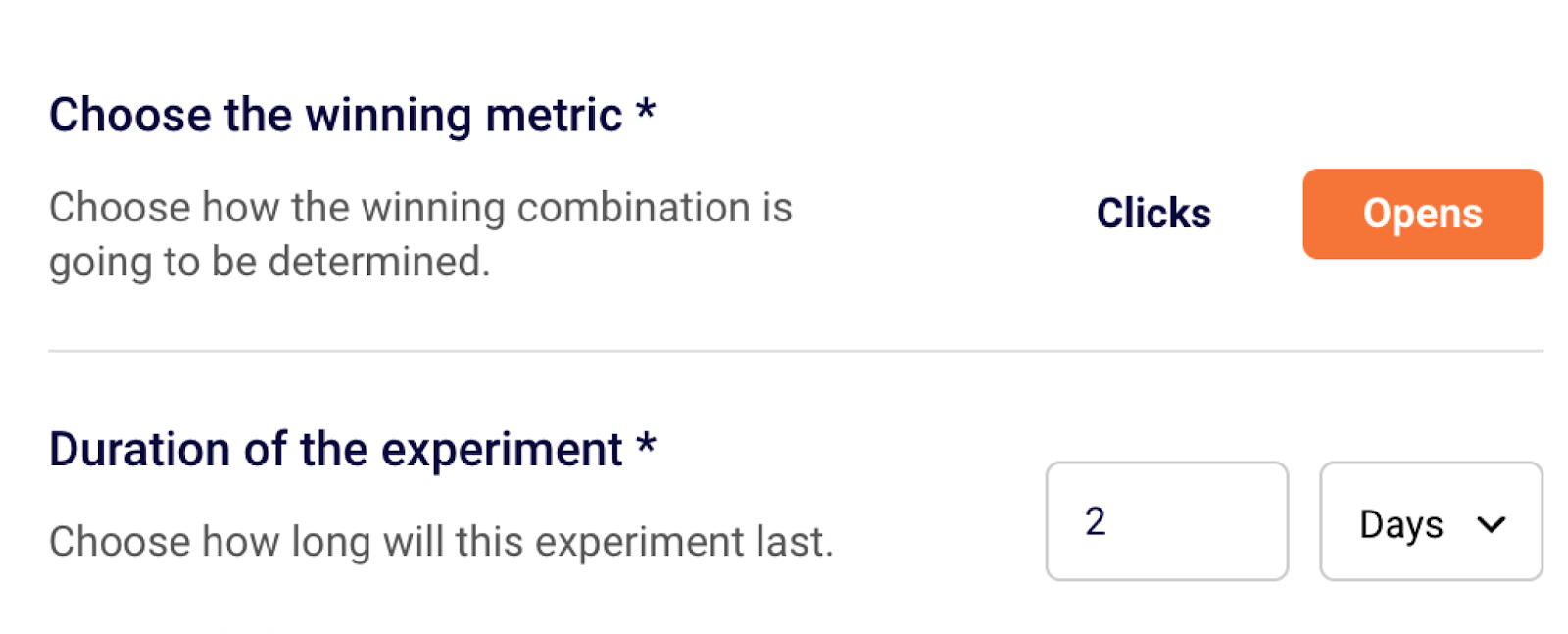

Winning metric selection:

- Choose Opens when testing subject lines, sender names, or send times

- Choose Clicks when testing email content, layout, CTAs, or offers
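The metric rule above can be sketched as a lookup followed by a rate comparison. Everything here is hypothetical (the `VariantResult` fields, the test-type keys, and the sample numbers), and it simply picks the highest rate; it does not reflect how Sender computes a winner internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantResult:
    name: str
    delivered: int
    opens: int
    clicks: int


# Which metric decides the winner for each kind of test, per the list above.
WINNING_METRIC = {
    "subject_line": "opens",
    "sender_name": "opens",
    "send_time": "opens",
    "content": "clicks",
    "layout": "clicks",
    "cta": "clicks",
    "offer": "clicks",
}


def pick_winner(results: list[VariantResult], test_type: str) -> VariantResult:
    """Return the variant with the highest rate on the metric for this test type."""
    metric = WINNING_METRIC[test_type]
    return max(results, key=lambda r: getattr(r, metric) / r.delivered)


results = [
    VariantResult("A", delivered=1_000, opens=250, clicks=30),
    VariantResult("B", delivered=1_000, opens=220, clicks=45),
]

print(pick_winner(results, "subject_line").name)  # A (higher open rate)
print(pick_winner(results, "cta").name)           # B (higher click rate)
```

Note how the same results crown a different winner depending on the metric, which is why the goal should be set before the test starts.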

Remember: larger audiences produce reliable results faster, which means you can run shorter tests and use smaller test groups.

Advanced Testing Strategies

Once you’ve gotten comfortable with the basics and have a few wins under your belt, these strategies can help you dig deeper:

Sequential testing

Win one test, implement it, then test the next thing. Build your improvements systematically instead of trying to fix everything at once.

Seasonal testing

That subject line that killed it in January might flop in July. Seasonal testing keeps you relevant.

Audience segment testing

Your new subscribers might respond totally differently than your longtime customers. Test the same thing across different groups.

Multi-step testing

Test big concepts (emotional vs. rational) before drilling into specifics (which emotional words work best).

Ready to see what your audience really wants? Head over to Sender’s Experiments feature and start your first test.